Background and integration of tracking systems in arivis Vision4D.

Introduction

Object tracking is the process of identifying objects and features and measuring changes in these objects over time. It is different from looking at a simple before/after, two-time-point dataset and measuring macro changes. Instead, tracking follows specific objects through a series of consecutive time points to better understand the dynamic processes behind the changes seen between start and finish.

Tracking systems take a series of images acquired over multiple time points and try to identify the same objects or features from one time point to the next, so that we can measure and monitor specific changes.

Examples of tracking applications include:

- Calcium ratio experiments - We monitor fluorescence intensity changes between cells

- Contact analysis - We track specific cells and try to identify the frequency and duration of contact events with other structures in the image, like blood vessels or other cell types

- Lineage tracking - Monitor where embryonic cells are, how often they divide and where the children of these divisions go

- Wound healing - Measure the speed at which cells migrate to close a gap between two separate cell layers

And many others besides. What all these applications have in common is that we are monitoring how a specific feature (wound area, velocity, intensity, etc.) changes over a time series.

The process of track creation can generally be considered as two separate processes:

- Identifying and marking specific objects or features

- Correlating these objects over the course of the time series to create a special group we call a track.

Both of these tasks can be carried out in a variety of ways depending on the specific application.

For example, object recognition can be done through automatic segmentation of an image, or it could be done by creating a simple region of interest and duplicating that region over all the available time points. Tracking can be done manually by a user making interpretations of the image data, or algorithmically by identifying segmented objects from one time-point to the next.

With this in mind, tracking accuracy depends both on the ability to recognize the objects in each time point individually in the first place, and on the ability to recognize the same object accurately from one time point to the next. Both of these are highly dependent on the quality of the image data and the sampling frequency.

Image quality

Because tracking is dependent on the ability to recognize objects over time, it is first, and foremost, dependent on the ability to recognize objects. This means that the image quality must be good enough for segmentation algorithms to work, or at the very least for manual identification of objects by the user.

Segmentation itself is the process of identifying which pixels in an image are part of objects, and also of identifying which pixels belong to which object so that touching objects can be separated. The simplest form of image segmentation is known as thresholding. This is where we set a rule that all pixels with an intensity either above or below a specific threshold are classified into the Objects pixel class, and the rest are classed into the Background class. Then we can just say that contiguous groups of pixels are individual objects.
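The thresholding rule and the "contiguous groups of pixels" step can be sketched in plain Python. This is a minimal illustration on a tiny 2D image stored as nested lists, assuming a fixed threshold and 4-connectivity (pixels that share an edge count as contiguous); the function names are hypothetical and this is not how the Vision4D segmenters are implemented.

```python
from collections import deque

def threshold(image, level):
    """Classify each pixel: True = Objects class, False = Background class."""
    return [[value > level for value in row] for row in image]

def label_objects(mask):
    """Label contiguous groups of object pixels using 4-connectivity.

    Returns a label image (0 = background, 1..n = object IDs) and n.
    """
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and labels[r][c] == 0:
                count += 1
                labels[r][c] = count
                queue = deque([(r, c)])
                # Flood-fill every object pixel reachable from (r, c)
                while queue:
                    y, x = queue.popleft()
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and labels[ny][nx] == 0):
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count

image = [
    [0, 9, 9, 0, 0],
    [0, 9, 0, 0, 8],
    [0, 0, 0, 0, 8],
    [7, 0, 0, 0, 0],
]
labels, n = label_objects(threshold(image, 5))
print(n)  # 3
```

The isolated bright pixel in the bottom-left corner becomes an object of its own, which is exactly the kind of spurious single-pixel object that noise tends to produce.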

Several aspects of images can make this process more or less difficult. For example, a noisy image will have a lot more pixel-to-pixel variation, which could result in many single-pixel objects or very rough object outlines. Also, some imaging modalities are much better suited to segmentation than others: fluorescence images are generally easier to segment than phase contrast or DIC images.

This image, for example, is quite challenging to segment using thresholds. There are variations of intensity inside what might be considered objects that could cause the head and tail to be separated. The objects themselves are out of focus, so their boundaries are imprecise, which could make it difficult to separate objects in close proximity. There are also big variations in the background intensity, which would make a simple threshold difficult to apply without some pre-processing.

The same sample imaged under different conditions (e.g. darkfield illumination) could be comparatively much easier to segment.

Sampling resolution

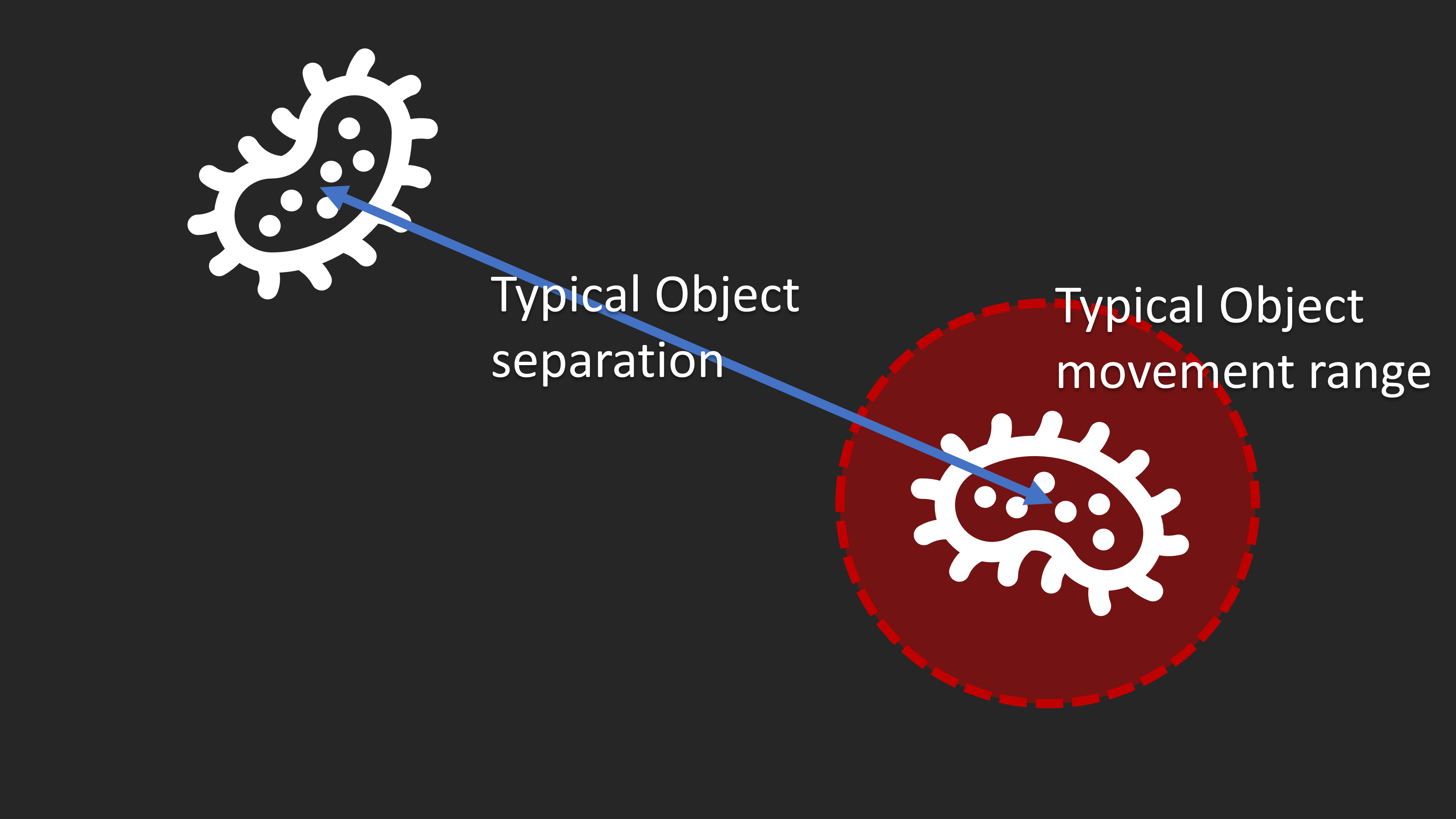

Along with image quality being a very significant factor in segmentation, sampling resolution is also critical for correct tracking. If the movement is too great compared to the typical object separation, correct identification of movement can be challenging or even impossible.


If, however, we can increase the acquisition frequency so that the movement from one time point to the next is small compared to the typical object separation, the confidence in the correct identification of tracks improves significantly.


As a rule of thumb, it is generally preferable to take images frequently enough that the typical movement of objects from one time-point to the next is no more than 20% of the typical distance between neighboring objects.
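The rule of thumb can be turned into a quick check. The sketch below assumes you have already estimated, for example from a pilot acquisition, the typical displacement per time point and the typical distance between neighbouring objects in the same units. The function names are hypothetical, and the `limit=0.2` default simply encodes the 20% guideline above.

```python
def displacement_ratio(step_displacement, neighbour_distance):
    """Movement per time point as a fraction of the typical neighbour spacing."""
    return step_displacement / neighbour_distance

def max_interval(current_interval, step_displacement, neighbour_distance, limit=0.2):
    """Longest time interval that keeps the displacement ratio within limit.

    Assumes roughly constant speed, so the displacement per time point
    scales linearly with the interval between time points.
    """
    ratio = displacement_ratio(step_displacement, neighbour_distance)
    return current_interval * limit / ratio

# Hypothetical pilot data: cells ~30 µm apart move ~12 µm between frames taken 60 s apart
print(displacement_ratio(12.0, 30.0))         # 0.4, twice the 0.2 guideline
print(round(max_interval(60.0, 12.0, 30.0)))  # 30: image at least every 30 s
```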

Similarly, in cases where we are looking to monitor intensity changes, it's important to take images frequently enough to measure those changes accurately. If those changes are rhythmic or affected by rhythms in the sample (e.g. heartbeats), the acquisition rate should be at least 4 times higher than the frequency of the changes in the sample.
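For rhythmic signals, the factor of 4 translates directly into a minimum acquisition rate and a maximum interval between time points. A small sketch, using a heartbeat at 120 beats per minute as a hypothetical example (function names are illustrative only):

```python
def min_acquisition_rate(signal_hz, factor=4):
    """Minimum number of time points per second for a rhythmic signal."""
    return factor * signal_hz

def max_time_interval(signal_hz, factor=4):
    """Longest allowed interval between time points, in seconds."""
    return 1.0 / min_acquisition_rate(signal_hz, factor)

# Hypothetical example: a heart beating at 120 beats per minute = 2 Hz
heart_hz = 120 / 60
print(min_acquisition_rate(heart_hz))  # 8.0 time points per second
print(max_time_interval(heart_hz))     # 0.125 s between time points
```

A factor of 4 is comfortably above the Nyquist minimum of 2 samples per cycle, which only guarantees that the rhythm is detected, not that the shape of each intensity change is measured accurately.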

All this taken together means that in many cases tracking will not be possible, and looking at macro before/after changes may be the most suitable type of analysis. Also, in many cases, the required sampling frequency, coupled with the exposure time needed to capture images of sufficient quality, may restrict acquisitions to single planes, thereby limiting the ability to measure changes in 4D.

Segmentation

Having acquired images for the purpose of tracking, the first task will be to establish an effective segmentation strategy. Several factors can complicate good segmentation throughout the time series. For example:

- The signal or background intensity may change throughout the time series

- Objects coming into contact with other objects will need to be effectively separated

- Object morphologies may change significantly throughout the time series resulting in the need for relatively permissive filters.

All of these factors can, to some extent, be handled by image and object processing algorithms.

Intensity tracks

First, we can consider some simple cases where objects are more or less stationary and we are simply interested in intensity changes. In such cases, we can simply segment the objects of interest from a single time-point and clone these over the entire series.

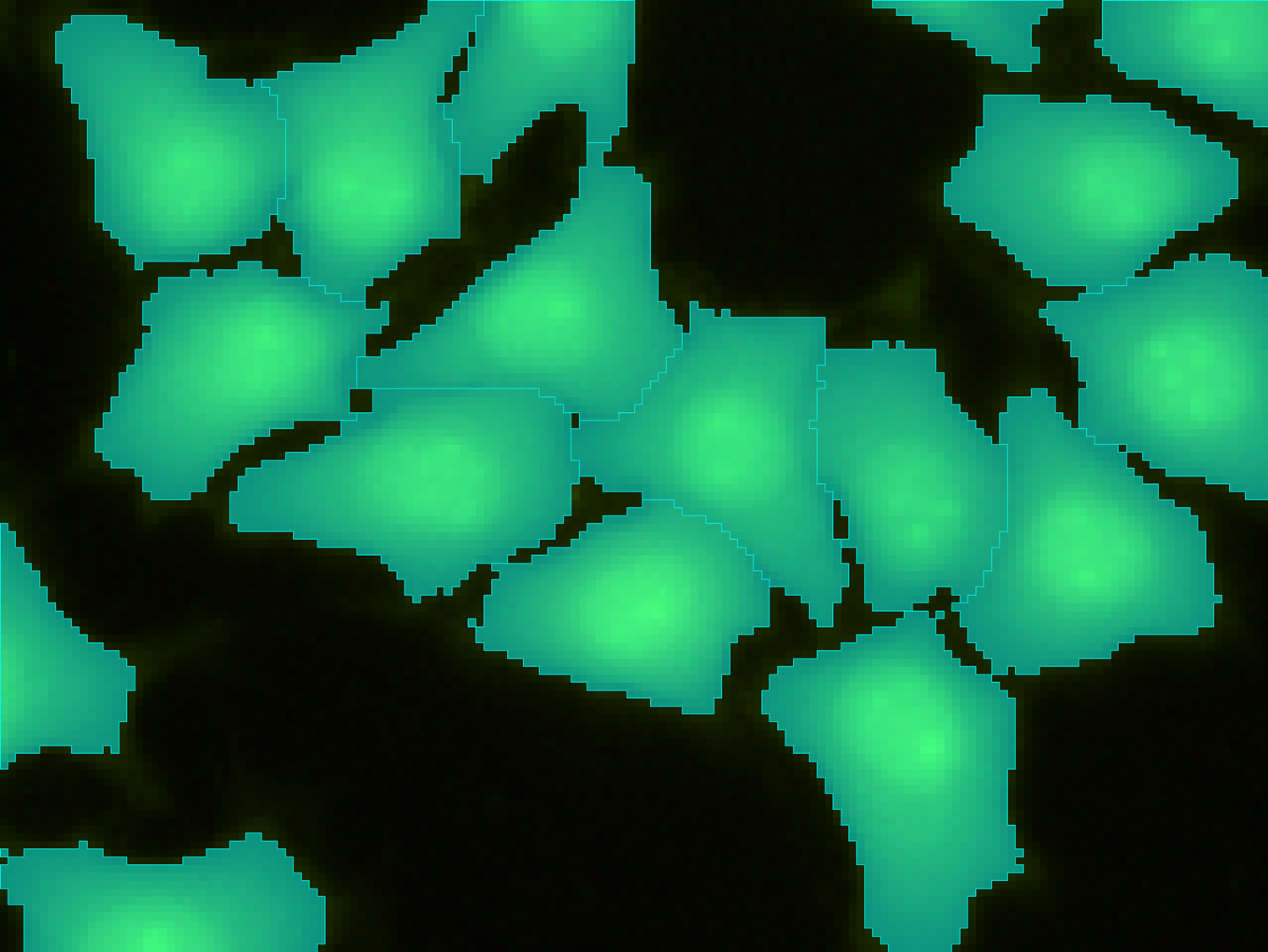

In this example, the first time point shows all the cells:

We can use this time point to mark all the cells we are interested in. In this case, a Watershed segmenter works adequately, although some smaller objects are incorrectly included:

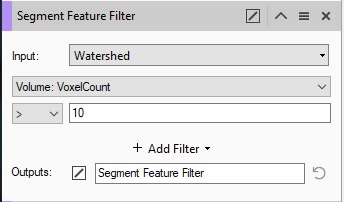

The segmentation can be followed by a Segment Feature Filter to specifically label only those objects large enough to be considered:
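A size-based feature filter of this kind amounts to measuring each object's area and keeping only the objects above a cut-off. The sketch below assumes a label image such as a segmenter produces (0 = background, 1..n = objects); the `min_area` value and function names are hypothetical, and the Segment Feature Filter itself offers many more features than area.

```python
from collections import Counter

def object_areas(labels):
    """Count the pixels belonging to each labelled object."""
    return Counter(v for row in labels for v in row if v != 0)

def filter_by_area(labels, min_area):
    """Return the IDs of objects whose area is at least min_area pixels."""
    return sorted(obj for obj, area in object_areas(labels).items() if area >= min_area)

labels = [
    [1, 1, 0, 2],
    [1, 1, 0, 0],
    [0, 0, 3, 3],
    [0, 0, 3, 3],
]
print(filter_by_area(labels, min_area=3))  # [1, 3]: object 2 is a single pixel
```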

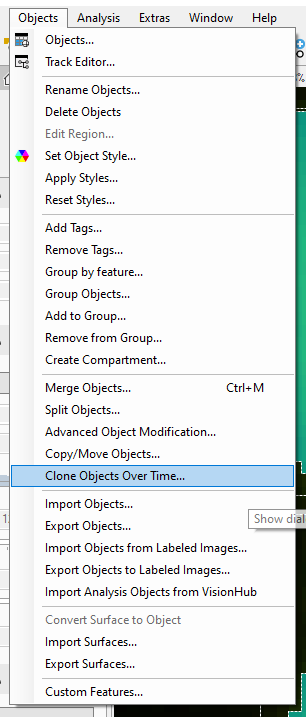

Because the intensities change throughout the series we can't use the same segmentation parameters for every time-point, but since the cells don't move we can simply clone the segmented objects over the entire time series from the Objects menu.

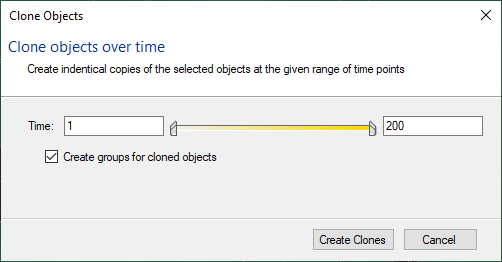

By using the option to create groups for the cloned objects we can easily plot how individual cells change intensity in the time series.

In the Objects table, the Master-Detail view allows us to display the groups in the top table, and the values for the cells at each time-point in the bottom table. Then the Charts can be used to plot any feature of those cells, in this case, mean intensity.
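Conceptually, cloning stationary objects over the series means reusing the same pixel masks at every time point and measuring each one there. The sketch below illustrates this with a tiny time series stored as a list of 2D frames and a label image segmented once from the first time point; the data layout and function name are assumptions for illustration, not the Vision4D object model.

```python
from collections import defaultdict

def mean_intensity_per_object(frames, labels):
    """Mean intensity of every labelled object at every time point.

    frames: list of 2D intensity images (one per time point)
    labels: 2D label image segmented once (0 = background)
    Returns {object_id: [mean at t0, mean at t1, ...]}.
    """
    # Collect the pixel coordinates of each object once
    pixels = defaultdict(list)
    for r, row in enumerate(labels):
        for c, obj in enumerate(row):
            if obj:
                pixels[obj].append((r, c))
    # Reuse ("clone") the same pixels at every time point
    return {
        obj: [sum(frame[r][c] for r, c in coords) / len(coords) for frame in frames]
        for obj, coords in pixels.items()
    }

labels = [[1, 1, 0],
          [0, 0, 2]]
frames = [
    [[10, 20, 0], [0, 0, 5]],   # t0
    [[30, 40, 0], [0, 0, 5]],   # t1: cell 1 gets brighter
]
print(mean_intensity_per_object(frames, labels))  # {1: [15.0, 35.0], 2: [5.0, 5.0]}
```

Each list plays the role of one group (one cell) in the Master-Detail view, and plotting it against time gives that cell's intensity curve.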

If automatic segmentation of all the cells of interest is not possible from a single time-point, the Projection viewer can be used to create a maximum intensity projection of the entire series over time. The resulting image can be used to segment the cells and then the objects can be copied over to the original time series.
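A maximum intensity projection over time keeps, for each pixel, the brightest value seen across all time points, so a cell that is only bright at some moments still shows up in the projected image. A plain-Python sketch of the projection, assuming the series is a list of equally sized 2D frames (the function name is hypothetical):

```python
def max_projection_over_time(frames):
    """Pixel-wise maximum across all time points of a 2D time series."""
    return [
        [max(values) for values in zip(*rows)]  # one pixel, all time points
        for rows in zip(*frames)                # the same row in every frame
    ]

frames = [
    [[1, 0], [0, 0]],   # t0: top-left cell bright
    [[0, 0], [0, 7]],   # t1: bottom-right cell bright
    [[3, 0], [0, 2]],   # t2
]
print(max_projection_over_time(frames))  # [[3, 0], [0, 7]]
```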

If no automatic segmentation is possible, manually annotating each cell, either from the first time point or from the maximum intensity projection, is also an option.

Segmenting objects for tracking

Correct segmentation is crucial for successful tracking. An error rate of as little as 5% per time point means that, on average, a track would only stay correct for about 20 time points (1/0.05). Of course, even incomplete tracks can still provide valuable information and some degree of error is inevitable, but generally, the better the segmentation, the better the tracking results.
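The 20-time-point figure follows from a simple model, assuming that each link between consecutive time points is wrong independently with probability p (an idealization, since real errors tend to cluster). The position K of the first wrong link then follows a geometric distribution:

```latex
\begin{aligned}
P(K = k) &= (1-p)^{k-1}\,p, \qquad k = 1, 2, \dots \\
\mathbb{E}[K] &= \sum_{k=1}^{\infty} k\,(1-p)^{k-1}\,p = \frac{1}{p} \\
p = 0.05 \;&\Rightarrow\; \mathbb{E}[K] = \frac{1}{0.05} = 20
\end{aligned}
```

Even at 99% per-link accuracy (p = 0.01) the expected run is only 100 time points, which is why small improvements in segmentation pay off in track length.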

Some common problems and solutions in segmentation include:

- Mean background or signal intensity changes over time - Use a normalization filter to even out the intensity range

- Noisy signal - Use denoising filters (a median filter can provide a good compromise between speed of processing and edge preservation)

- Uneven background signal - The "Particle enhancement" denoising filter, or "Preserve bright objects" Morphology filter can both isolate bright features from their immediate background
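The first two remedies are easy to illustrate. The sketch below shows a per-frame min-max normalization, which rescales each time point to the range 0 to 1, and a 3×3 median filter, which replaces each pixel by the median of its neighbourhood so that isolated noisy pixels are suppressed. These are generic textbook versions written for illustration; the normalization and denoising filters in Vision4D may use different formulas and options.

```python
from statistics import median

def normalize_frame(frame):
    """Min-max normalize one 2D frame to the range [0, 1]."""
    values = [v for row in frame for v in row]
    lo, hi = min(values), max(values)
    if hi == lo:
        return [[0.0 for _ in row] for row in frame]  # flat frame: nothing to scale
    return [[(v - lo) / (hi - lo) for v in row] for row in frame]

def median_filter_3x3(frame):
    """3x3 median filter; border pixels use only the neighbours that exist."""
    rows, cols = len(frame), len(frame[0])
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            window = [
                frame[y][x]
                for y in range(max(0, r - 1), min(rows, r + 2))
                for x in range(max(0, c - 1), min(cols, c + 2))
            ]
            out_row.append(median(window))
        out.append(out_row)
    return out

noisy = [
    [10, 10, 10],
    [10, 99, 10],   # single hot pixel
    [10, 10, 10],
]
print(median_filter_3x3(noisy)[1][1])             # 10: the isolated spike is removed
print(normalize_frame([[50, 100], [150, 250]]))   # [[0.0, 0.25], [0.5, 1.0]]
```

Per-frame normalization also removes genuine intensity changes, so in a workflow like this it would only be applied to the copy of the data used for segmentation, not to the data being measured.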

In many cases, the Blob Finder segmenter can deal with all of the above issues in one operation.

However, as stated above, the accuracy of the segmentation is particularly important for good tracking. Since tracking algorithms typically try to find a corresponding object in time point n+1 for every object in time point n, minimizing the number of incorrect or missing objects is critical. As such, there are typically two main segmentation problems with regard to tracking:

- Objects that are segmented but shouldn't be, or objects that should be segmented but aren't

- Objects that are incorrectly split, either splits that shouldn't exist or splits that should have occurred but didn't

Often the segmentation step will create objects that need not be tracked. In most cases, the objects to be tracked share some common features that can be used to distinguish them from those that should not be tracked. We can use the Segment Feature Filter operation to tag only those objects we want:

Problems with splitting can usually be dealt with by refining the segmentation, either by using different splitting parameters or even a different segmentation operation, together with segment feature filters. The main aim is to avoid situations like the one here where, depending on the settings used, the tracking algorithm may need to decide what to do with two objects where there should only be one, or vice versa.

Perfect segmentation is highly unlikely with non-perfect images, but we should strive to reduce the potential sources of error.

Tracking segmented objects

Once the segmentation has been optimized, we can add the tracking operation to our pipeline.

The tracking operation offers a range of parameters that must be set according to the needs of the analysis. As stated above, when tracking, we identify objects at every time point and then try to establish the connections between these objects. What is allowed depends on the parameters of the operation. Broadly, these parameters tell the algorithm:

- How the objects are likely to move - are they likely to move in a more or less straight line or is the movement more chaotic?

- How far they are likely to move - see Sampling resolution above

- How much the objects are likely to change - will they mostly retain their shape and intensity, and are they expected to merge or divide?

Motion type

The main aim of specifying the motion type is to facilitate the correct identification of objects from one timepoint to the next. When several objects are moving around in 3D space, it is quite likely that at some point ambiguous situations arise when multiple candidates might be considered as the tracked object.

In Vision4D, 3 methods of motion detection are available:

- If the objects are generally moving in a straight line, then a linear regression algorithm is best. That way, if two objects come into close proximity from different directions, we assume that the one most aligned with the previous movement is most likely to be the same object. Of course, this method doesn't perform as well if the objects don't move in a straight line.

- If the objects generally move forward, but sometimes veer in one direction or another then the Conal Angle approach will be better suited. In this case, we define the conal angle and assume that the objects within this angle of previous movements are most likely to be the same as before. However, this may not be sufficient if the objects can move backward as well as forward.

- When the expected direction of motion can change at any moment and the movement is essentially chaotic we can use a Brownian motion algorithm which looks for candidates anywhere within a search radius of a previous position and assumes the closest one is the most likely.

[Figure: Linear Regression, Conal Angle, Brownian Motion]

In all cases, the size of the search radius must be set according to the expected movement from one time point to the next, bearing in mind that this distance should be kept to a practical range when acquiring the images (see Sampling resolution above).
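For the Brownian motion case, the rule of looking anywhere within the search radius and preferring the closest candidate can be sketched as follows. Positions are assumed to be (x, y, z) centroids in calibrated units; the function is hypothetical and ignores the global assignment a real tracker has to perform when several tracks compete for the same candidate.

```python
import math

def nearest_within_radius(previous, candidates, search_radius):
    """Index of the closest candidate centroid within search_radius, or None.

    None means no candidate is in range, so the track would end here.
    """
    best_index, best_dist = None, math.inf
    for i, candidate in enumerate(candidates):
        d = math.dist(previous, candidate)
        if d <= search_radius and d < best_dist:
            best_index, best_dist = i, d
    return best_index

previous = (0.0, 0.0, 0.0)
candidates = [(3.0, 4.0, 0.0), (1.0, 1.0, 0.0), (20.0, 0.0, 0.0)]
print(nearest_within_radius(previous, candidates, 6.0))  # 1
print(nearest_within_radius(previous, candidates, 1.0))  # None: nothing close enough
```

Shrinking the search radius is what prevents the second call from jumping to a distant object, which is why the radius should match the expected movement per time point.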

When selecting Linear Regression or Conal Angle, additional parameters need to be set.

In both cases we can set the "Max time points" option to define how many time points to use to calculate the direction of movement.

In the case of Conal Angle, we also need to set the maximum permitted deviation from previous directions.
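The two extra parameters can be illustrated together. In the sketch below, the direction of movement is estimated from the last few positions of a track (the role of "Max time points"), and a candidate is accepted only if the step towards it deviates from that direction by no more than a maximum angle (the role of the conal angle). The direction is estimated here by fitting a straight line to each coordinate against time (ordinary least squares) and taking the slopes; this is one plausible reading of "linear regression", not necessarily the exact Vision4D formula, and all function names are hypothetical.

```python
import math

def regression_direction(track, max_time_points):
    """Velocity vector from a least-squares line fit of each coordinate vs. time.

    track: list of (x, y, z) positions at consecutive time points (no gaps).
    Only the last max_time_points positions are used.
    """
    points = track[-max_time_points:]
    n = len(points)
    if n < 2:
        raise ValueError("need at least two positions to estimate a direction")
    ts = range(n)
    t_mean = (n - 1) / 2
    denom = sum((t - t_mean) ** 2 for t in ts)
    slopes = []
    for axis in range(len(points[0])):
        values = [p[axis] for p in points]
        v_mean = sum(values) / n
        slopes.append(sum((t - t_mean) * (v - v_mean) for t, v in zip(ts, values)) / denom)
    return tuple(slopes)

def angle_between(u, v):
    """Angle in degrees between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    if norm == 0:
        return 0.0  # treat a stationary object as not deviating
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))

def within_cone(track, candidate, max_time_points, max_angle_deg):
    """True if the step from the last position to candidate stays inside the cone."""
    direction = regression_direction(track, max_time_points)
    step = tuple(c - p for c, p in zip(candidate, track[-1]))
    return angle_between(direction, step) <= max_angle_deg

track = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (3, 0, 0)]  # moving along +x
print(within_cone(track, (4, 1, 0), 3, 60))  # True: 45 degrees off course
print(within_cone(track, (2, 1, 0), 3, 60))  # False: 135 degrees, moving backward
```

Using only the last few positions lets the estimated direction follow slow turns, while a larger "Max time points" value makes it more robust to noise in individual centroids.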

Fusions and Divisions

Sometimes, tracking involves fusions and divisions of tracks.

If fusions are allowed and only one segmented object can be found within the search radius of where two objects were found previously, the algorithm will assume that those two objects merged into a single one, and the tracks will merge.

Likewise, if divisions are allowed and two objects are found in the search radius of a track where only one existed previously, the algorithm will assume that the object divided or split and the tracking will continue along both branches.
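The fusion and division rules can be expressed as a simple count of what lies inside the search radius. The sketch below is a deliberately simplified, hypothetical model of the logic described above: it looks at a single track (for divisions) or a pair of tracks (for fusions) in isolation, whereas a real tracker has to resolve all tracks and candidates jointly.

```python
import math

def candidates_in_radius(position, candidates, search_radius):
    """Indices of next-time-point centroids within search_radius of position."""
    return {i for i, c in enumerate(candidates) if math.dist(position, c) <= search_radius}

def classify_event(track_ends, candidates, search_radius,
                   allow_fusions=False, allow_divisions=False):
    """Classify the link between time points t and t+1 for one or two tracks.

    track_ends: last known position(s) of the track(s) considered, at time t
    candidates: object centroids segmented at time t+1
    """
    hits = set()
    for end in track_ends:
        hits |= candidates_in_radius(end, candidates, search_radius)
    if not hits:
        return "track ends"   # nothing within reach at t+1
    if allow_fusions and len(track_ends) == 2 and len(hits) == 1:
        return "fusion"       # two tracks, one object: the tracks merge
    if allow_divisions and len(track_ends) == 1 and len(hits) == 2:
        return "division"     # one track, two objects: the track branches
    return "one-to-one"       # each track keeps at most one candidate

two_ends = [(1.0, 0.0), (-1.0, 0.0)]
print(classify_event(two_ends, [(0.0, 0.0)], 2.0, allow_fusions=True))  # fusion
print(classify_event(two_ends, [(0.0, 0.0)], 2.0))                      # one-to-one
```

The second call shows why the option matters: with fusions disabled, the same configuration is treated as an ordinary link, and one of the two tracks has to end.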

Some points of note:

- The algorithm cannot differentiate between genuine splits and fusions and those caused by segmentation errors. To limit the number of errors, it is important to optimize the segmentation to reduce the number of unintended splits.

- Allowing fusions and divisions increases the possibilities for error. It is best not to use these options unless the purpose of the tracking task is to identify fusions and divisions.

Additional options relating to tracking fusions and divisions are available and described in the help files.

Weighting

Another way to optimize tracking when there can be multiple likely candidates within the search radius is to use weighting to prioritize objects that are more similar to the object in the previous time point. Weighting can be used with any object features, such as size, shape or intensity values, and multiple features can be combined to prioritize candidates more specifically based on their similarity to the objects in the previous time point.
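One way to picture weighting is as a combined score: distance to the previous position plus weighted differences in selected features, with the lowest score winning. The sketch below is a hypothetical illustration of that idea (the feature names, the relative-difference formula and the weights are all assumptions), not the scoring used by Vision4D.

```python
import math

def weighted_score(previous, candidate, weights):
    """Lower is better: distance plus weighted relative feature differences.

    previous/candidate: dicts with a 'position' tuple and feature values
    weights: {feature_name: weight}; features not listed are ignored
    """
    score = math.dist(previous["position"], candidate["position"])
    for feature, weight in weights.items():
        a, b = previous[feature], candidate[feature]
        # Relative difference, so features with large values don't dominate
        score += weight * abs(a - b) / max(abs(a), abs(b), 1e-12)
    return score

def best_candidate(previous, candidates, weights):
    """Index of the candidate with the lowest weighted score."""
    return min(range(len(candidates)),
               key=lambda i: weighted_score(previous, candidates[i], weights))

prev = {"position": (0.0, 0.0), "volume": 100.0, "mean_intensity": 50.0}
cands = [
    {"position": (2.0, 0.0), "volume": 30.0, "mean_intensity": 20.0},   # closer, dissimilar
    {"position": (3.0, 0.0), "volume": 105.0, "mean_intensity": 52.0},  # further, similar
]
print(best_candidate(prev, cands, {}))                                      # 0: distance only
print(best_candidate(prev, cands, {"volume": 5.0, "mean_intensity": 5.0}))  # 1
```

The same mechanism explains the caveat that follows: if a cell's volume roughly halves when it divides, these weights would penalize the correct daughter cell.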

Note that using weighting can lead to incorrect track identification if the objects undergo significant intensity or morphology changes as they move through the time series. For example, weighting is unlikely to improve track accuracy when tracking dividing cells.

Reviewing Tracking results

Once the tracking parameters have been set as needed the operation can be run and the segmented images will be analysed to create tracks. The outputs are:

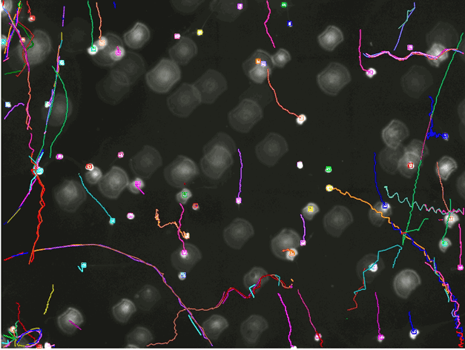

- A visual representation of the tracks on the image, which allows users to evaluate visually whether the results are correct

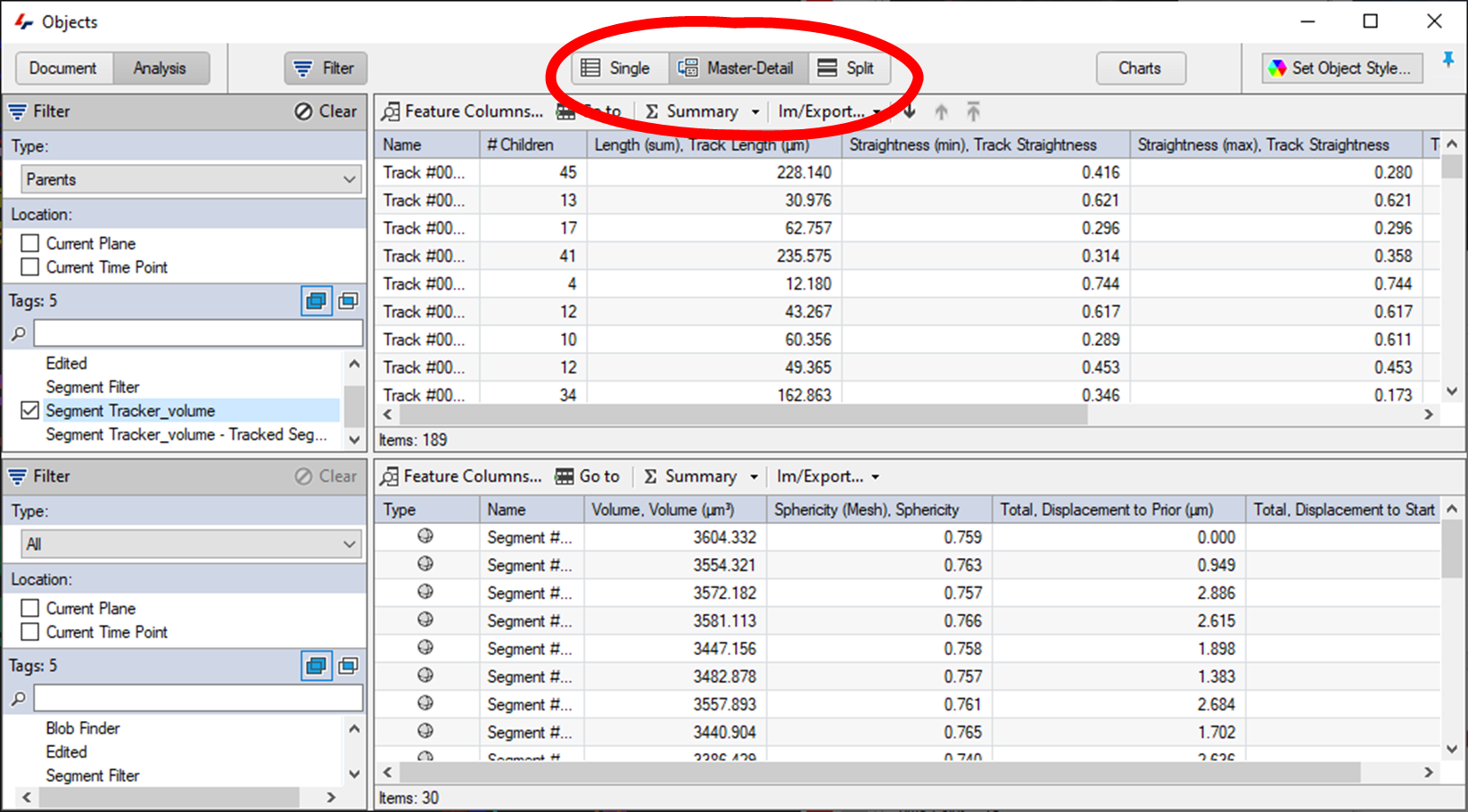

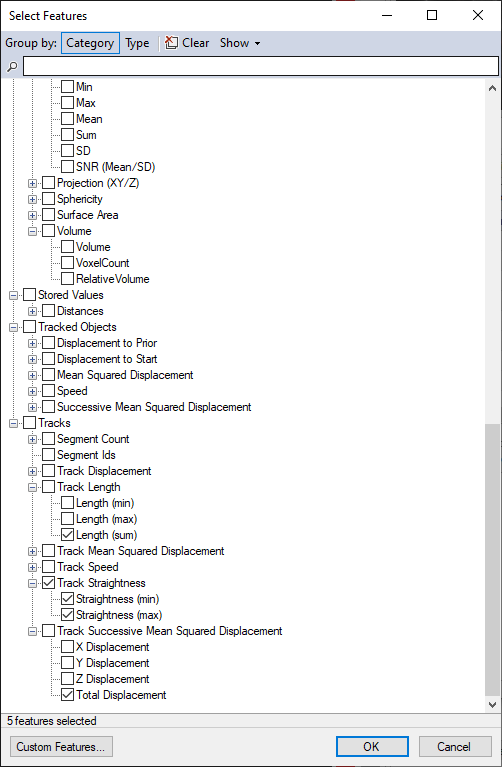

- Entries for each track in the Objects table, where users can review specific features of the tracks themselves (displacement, duration, number of segments in the track, etc.) and features of the segments in each track, sorted by the track they belong to.

This enables the user to do a few things.

First, if the tracks appear wrong we can revert the tracking operation, change the parameters so as to try and improve the results, and run the operation again. If no good tracking parameters exist because the image doesn't provide good automatic tracking conditions, manual correction of the tracks is also possible.

Secondly, the objects table can be configured to display features of the tracks and their segments. Since the tracks are essentially groups of objects it is often best to switch the Objects table display to the Master/Detail view, which shows the tracks in the upper table and the segmented objects in those tracks in the lower table.

Each table can then be configured individually to show pertinent information for both tracks, and the segments in those tracks.
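To make the track-level features concrete, here is how a few of them could be computed from a track's segment centroids. The definitions used (displacement as the straight-line distance from first to last position, path length as the sum of steps, duration from first to last time point) are common conventions but are assumptions here; the Vision4D help files give the exact definitions of its own features.

```python
import math

def track_features(times, positions):
    """Basic features of one track (hypothetical helper).

    times: time of each segment (e.g. in seconds), in order
    positions: (x, y, z) centroid of each segment, same order
    """
    steps = [math.dist(a, b) for a, b in zip(positions, positions[1:])]
    duration = times[-1] - times[0]
    path_length = sum(steps)
    return {
        "segments": len(positions),
        "duration": duration,
        "displacement": math.dist(positions[0], positions[-1]),
        "path_length": path_length,
        "mean_speed": path_length / duration if duration else 0.0,
    }

print(track_features([0, 10, 20], [(0, 0, 0), (3, 4, 0), (6, 0, 0)]))
# {'segments': 3, 'duration': 20, 'displacement': 6.0, 'path_length': 10.0, 'mean_speed': 0.5}
```

Comparing displacement with path length is a quick way to tell directed movement (ratio close to 1) from meandering movement (ratio much smaller than 1).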

Finally, the results can be exported, either as a pipeline operation, or from the Object window's Im/Export... menu.

Editing tracks

The tracking tools in arivis Vision4D are very good, but tracking results are unlikely to be perfect. A range of options is available if the results of the tracking operation are unsatisfactory:

- The first preferred option should be to try and improve either the segment detection parameters or the tracking parameters. Improving the results for the automated tracking reduces the time required to do the analysis and reduces the need for manual interaction.

- If neither the segmentation nor the tracking can be improved any further, the next best option may be to improve the acquisition to facilitate the analysis.

- Of course, acquiring new data is often not practical, and the existing acquisition may already be the best that is technically achievable. In that case, manually editing the tracks is also a possibility.

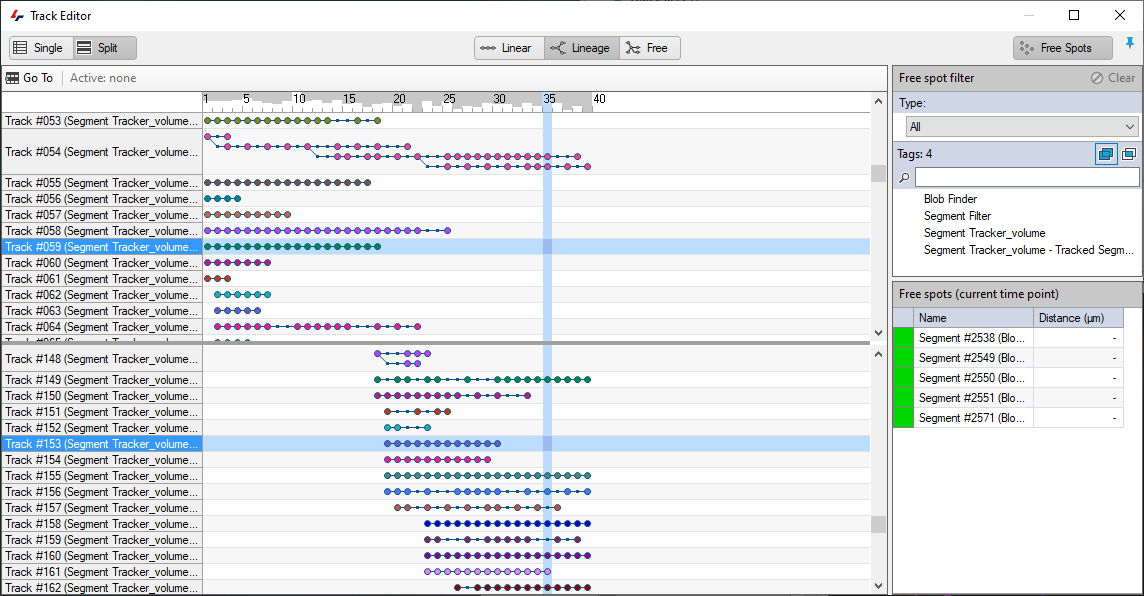

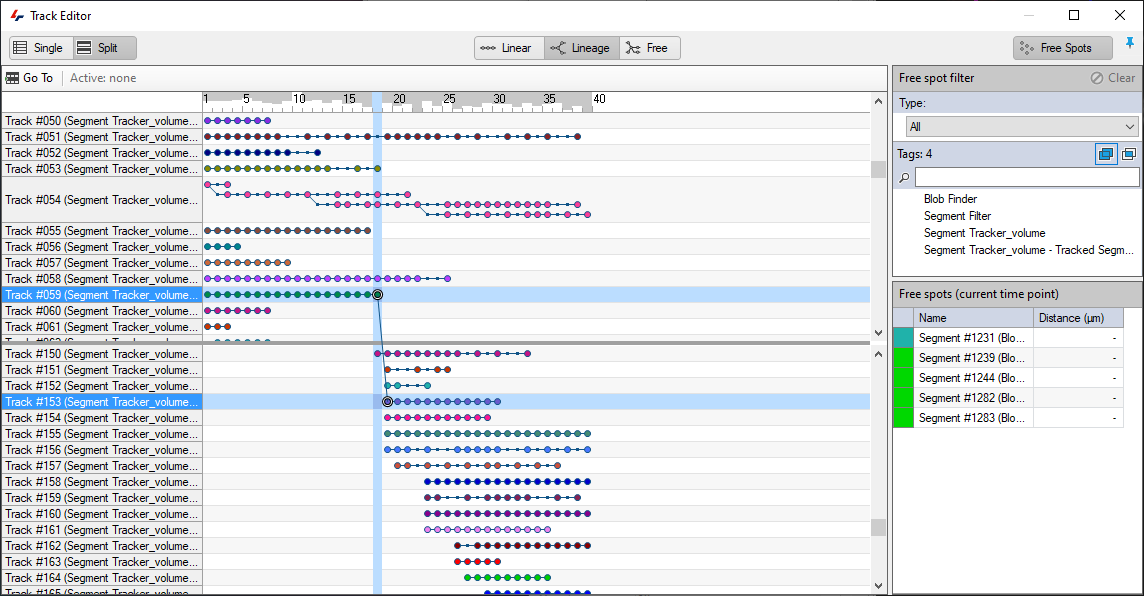

The Track Editor can be found in the Objects menu.

In the Track Editor, users can link, split, and merge tracks, as well as assign segments to tracks if they were missed, or manually remove individual segments incorrectly assigned to a track.

In this example, we have two tracks that look as though they should be connected but are not:

In such cases, the first thing to do is double-check that they really should be connected, as they may only appear so due to the current visualisation parameters. Tracks can appear connected when they shouldn't be because:

- The perspective suggests a continuation but a different angle would reveal otherwise

- The two track segments may be separated in time, and playing back the time series might reveal that they are not in fact connected.

Once the connection is confirmed, editing the tracks is as simple as drawing a line between the last time point where the track was correct and the next time point.

Further details on track editing are available in the help files.

TL;DR

- Optimize your segmentation as much as possible. Tracking is dependent on segmentation results and the better these are, the more accurate the tracking will be

- Try different parameters to see which work best for your data, especially with regard to the motion types

- Only use what you need:

  - Reduce the search radius to avoid big jumps in the tracks

  - Only use weighting if the segments don't change shape/intensity much over time

  - Don't allow fusions or divisions unless this is necessary

- Avoid manually editing tracks where possible, to reduce the time required for the analysis and reduce user bias

- Consider using VR for manual track editing and creation if automated 4D tracking doesn't work for your images