This article outlines the hardware requirements, architecture, and deployment of the arivis VisionHub system.

Introduction

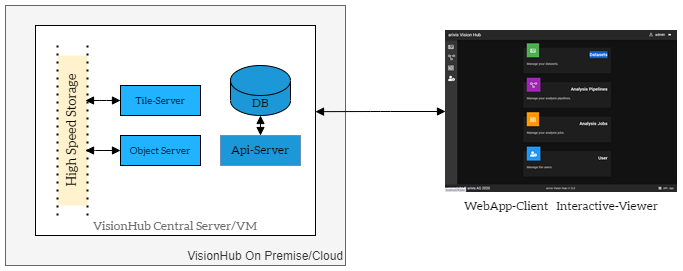

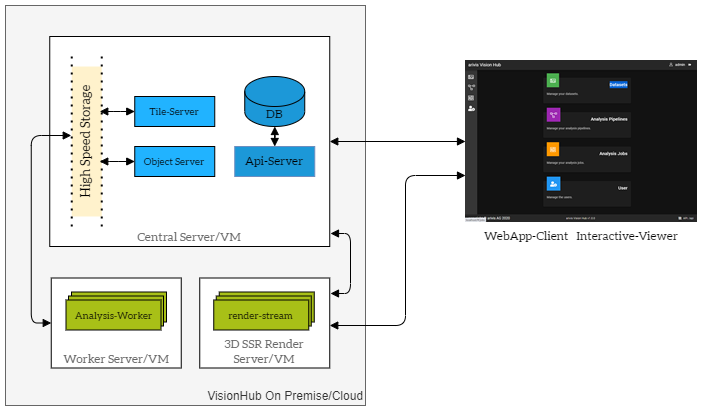

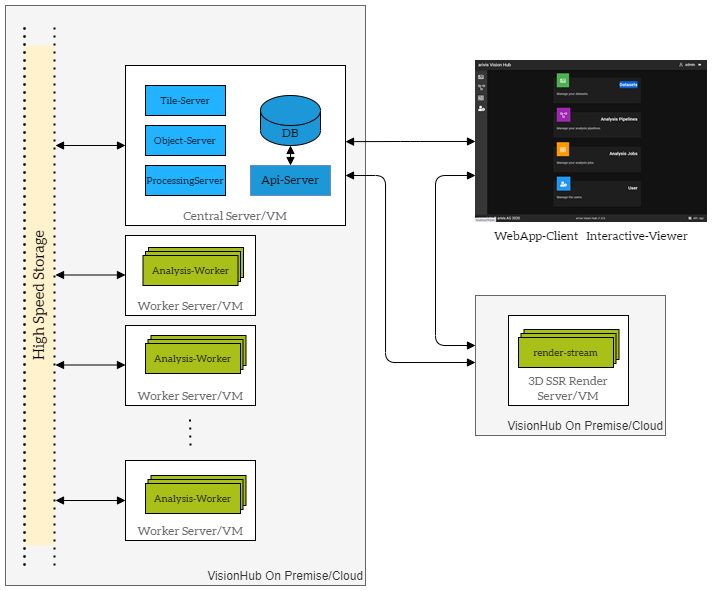

VisionHub is based on a service architecture that allows flexible packaging and rollout scenarios for on-premises or cloud installations (see architecture deployment examples). A VisionHub installation can start with a single server or consist of an extensible, high-performance cluster of servers for different purposes. We recommend adding at least one additional server when VisionHub is intended for scalable computation or 3D server-side rendering (SSR). This ensures that analysis workers and rendering load do not interfere with the interactive user experience of the VisionHub frontend.

As not all VisionHub components can be fully restricted in their memory and CPU/GPU usage, we recommend using dedicated servers for the VisionHub installation and not running other services or applications on these machines. Dedicated servers prevent VisionHub from competing for resources (processing or otherwise) with non-arivis software.

You can use VMs to set up dedicated servers for a VisionHub installation. They allow easy adjustment of performance parameters and flexible scaling even after installation.

High-Speed Image Storage

The VisionHub system requires high-speed access to a file system folder. This folder is the root for all VisionHub-related data, including all managed images, pipelines, and workflow results. All VisionHub components use this directory directly when image data is read (during exploration in the viewer or during analysis) or added (results from analysis, uploads via the web frontend).

VisionHub supports UNC paths (with user name and password) to mount a file system. As stated above, multiple dedicated servers can be used for specific tasks such as the management server, analysis workers, or 3D rendering. If multiple VisionHub servers are deployed, the central folder can be shared with the other servers, or all servers can use a central network file system.

VisionHub will NEVER alter stored image data, and it expects that no external process alters data within this folder. However, an image may have a read lock while VisionHub components are working on that file.

VisionHub can automatically watch the image storage file system and reflect changes to it. For large-volume acquisitions, and to avoid copying large image data, devices or users should write directly to this directory. The file system permission handling and the acquisition workflow ensure that no data is modified or deleted.

Deployment examples

The following deployment examples show basic options for deploying VisionHub on-premises or in the cloud.

Single server, simple installation

Multiple servers, analysis + internal 3D server-side render (SSR) server

High performance analysis cluster, external 3D SSR render server

Client Requirements

- Web Browser (Firefox, Chrome, Edge, Safari etc.)

Server Requirements

Server Requirements (Central Server)

- Windows Server 2012/2016/2019

- Fast access to image storage (gigabit Ethernet) or local hard drives for images

- VisionHub 2.5 GB; SQL Express 6 GB; NodeJS 10 GB

- 32 GB main memory

- 8 Core CPU

- VisionHub components: database, api-server, webapp, tile-server, import-worker

Server Requirements (Analysis Worker)

Each CPU core pack allows the analysis workers to use an additional 16 cores. For parallelized processing, workers can be deployed on multiple servers. As stated above, each worker should ideally get its own dedicated server so that it can use all available CPU/GPU power. A server can be configured to run multiple analysis workers, but they will then compete for memory and CPU/GPU power.

- Windows Server 2012/2016/2019

- Fast access to image storage (gigabit Ethernet) or local hard drives for images

- VisionHub 1.5 GB; NodeJS 10 GB

- 32 GB main memory

- Optional: local temporary storage space: X TB

- If the analysis pipeline uses image-modifying operators such as pre-processing operations, each worker creates temporary data for each image-modifying operator and therefore requires space for this data. For example, analyzing a 100 GB file with a complex pipeline that has 3 pre-processing operations (2 denoising filters and one correction filter) requires 300 GB of temporary space for each worker or workflow run. This space is not always required; it depends on the pipeline being used.

- Another option for handling temporary data storage is to use a high-speed central storage for the cache folder.

- CPU Cores: The number of cores required depends on the number of required workers. Each worker requires one core pack utilizing 16 cores.

- Optional: GPU

- Analysis workers can utilize available GPU for certain operators. GPU is only required when a machine learning operator is being used in the pipeline. If no GPU is available, the worker will use CPU instead. The GPU must be on the analysis worker server; it cannot use the GPU on the render server.
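The sizing rules above can be turned into a quick estimate. The following is a minimal sketch, not part of VisionHub: the class and function names are hypothetical, and it assumes (as in the 100 GB example) that every image-modifying operator writes one temporary copy the size of the input image.

```python
from dataclasses import dataclass

# From the text: one core pack lets a worker use 16 cores.
CORES_PER_CORE_PACK = 16


@dataclass
class WorkerPlan:
    """Hypothetical planning record; not a VisionHub data structure."""
    workers: int                # number of analysis workers to deploy
    largest_image_gb: float     # size of the largest image to analyze
    image_modifying_ops: int    # image-modifying operators in the pipeline


def temp_space_per_worker_gb(plan: WorkerPlan) -> float:
    """Assumes one image-sized temporary copy per image-modifying operator."""
    return plan.largest_image_gb * plan.image_modifying_ops


def total_cores(plan: WorkerPlan) -> int:
    """One core pack (16 cores) per worker."""
    return plan.workers * CORES_PER_CORE_PACK


plan = WorkerPlan(workers=2, largest_image_gb=100.0, image_modifying_ops=3)
print(temp_space_per_worker_gb(plan))  # 300.0 (GB per worker, as in the example above)
print(total_cores(plan))               # 32
```

Treat the result as an upper-bound planning figure; pipelines without image-modifying operators need no temporary space.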

Server Requirements (3D SSR render server)

The 3D SSR render server gets the volume data for the images to render via the TileServer interface and does not require direct access to the central image storage. This allows deployment of the render server outside the intranet and allows for the possibility to use mixed cloud/on-premises installations for this component.

Each 3D SSR render server can provide multiple render streams, which share the graphics memory and processing power. For example, with an 8 GB graphics card you could provide either one stream with up to 8 GB of volume load or two streams with up to 4 GB of volume load each. Discuss this decision with your account manager in preparation for deployment; advice can be provided based on expected use.

- Windows Server 2012/2016/2019

- VisionHub 1 GB; NodeJS 10 GB

- Local cache: X TB (depending on the number of images)

- Required for caching tiles before rendering. If the system is configured to provide a single stream with 8 GB of volume load, each dataset might load up to 8 GB of image tiles from the VisionHub tile server. To avoid reloading, we suggest choosing a cache size based on the number of images on the system. For example, 4 images with an 8 GB graphics card need 32 GB of cache, and 500 images × 8 GB = 4 TB. The more images, the larger the cache needed.

- Another option is to use a high-speed central storage for cache, but this is only possible if installed in same intranet.
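The stream and cache arithmetic above can be sketched as follows. The function names are hypothetical, and the sketch assumes an even split of graphics memory across streams and one full volume load cached per image, which matches the worked numbers in the text but may not reflect every configuration.

```python
def volume_load_per_stream_gb(gpu_memory_gb: float, streams: int) -> float:
    """Graphics memory is split across the render streams (assumed evenly)."""
    if streams < 1:
        raise ValueError("at least one render stream is required")
    return gpu_memory_gb / streams


def suggested_cache_gb(image_count: int, volume_load_gb: float) -> float:
    """Each image may load up to one full volume load of tiles into the cache."""
    return image_count * volume_load_gb


print(volume_load_per_stream_gb(8, 2))  # 4.0 GB per stream
print(suggested_cache_gb(4, 8))         # 32 GB
print(suggested_cache_gb(500, 8))       # 4000 GB, i.e. about 4 TB
```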

Graphics Card

The VisionHub renderer is based on the NVIDIA OptiX ray-tracing engine (https://developer.nvidia.com/optix), version 7.2. An NVIDIA graphics card with a current driver is required.

Minimal

- NVIDIA P4000 8GB or above

- NVIDIA GeForce 10xx (8GB)

Recommended

- NVIDIA GV100 64GB

- NVIDIA QUADRO RTX 5000 or above

- NVIDIA 20xx (10GB)

- For large surface visualizations, we recommend using RTX hardware. RTX drastically improves the performance of surface rendering; however, it does not influence volume rendering.

Known Issues

- We use the NVIDIA hardware encoder for real-time video encoding. On a consumer graphics card (and even on a professional graphics card), the number of concurrently used encoders is limited.

- If you plan to use virtual machines (VMs), make sure that the virtualization stack can handle the graphics card. VMware provides a commercial option that allows splitting the GPU. Hyper-V, on the other hand, can assign the complete graphics card to one VM but cannot share the GPU among multiple VMs.

Customer Deployment Information

Required Accesses for Efficient Deployment:

- For both On-Premises and Cloud installations:

- Provide VM/Servers with the following accesses and connections:

- All VisionHub servers must be in the same subnet and visible to one another. Firewall rules must allow UDP and TCP communication between them. If this is not possible, this must be discussed with your account manager and technical team before deployment to ensure an efficient workaround, accurate accounting for realistic deployment time, and allow for appropriate installation and configuration charges.

- All VisionHub servers (except 3D SSR server) must be connected to the central image store.

- The central server must be accessible by the expected clients.

- Provide VPN connection to VisionHub servers:

- This accessibility is mandatory for efficient deployment for both parties. If VPN connection cannot be provided to the VisionHub servers due to security restrictions, this must be discussed with your account manager and technical team before purchase, as additional installation and configuration fees will be charged.

- Provide example datasets with pipelines for testing of installation in final infrastructure. If this cannot be provided, our team can provide appropriate use cases for this purpose (see next section).

- Technical contact for support on customer site. It is possible our deployment team will require technical support for customer infrastructure during installation. To avoid delays in the event this is needed, a technical point of contact must be designated before planned deployment.

- Optional: LDAP/OAUTH2 integration: If this is required, please consult with our technical team to ensure appropriate access information is provided for deployment.


Average Expected Efforts for Technical Onboarding:

- 2-3 hours for setup of the central server, provided that all information for external sources is available (e.g. passwords, paths, usernames) and that these sources have been proven compatible with VisionHub (this should be ensured before the planned deployment, e.g. access to the authentication system)

- 30 minutes for setup of each analysis worker

- 1-4 hours for testing with arivis and customer data

- 1-2 hours for final demonstration with key stakeholders and technicians
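For planning purposes, the figures above can be summed into a rough range. This is only a sketch with a hypothetical function name; it uses the average values listed above, excludes user training, and assumes none of the workarounds mentioned earlier (for example, missing VPN access) are needed.

```python
from typing import Tuple


def onboarding_hours(analysis_workers: int) -> Tuple[float, float]:
    """Return a (minimum, maximum) estimate in hours for technical onboarding."""
    central_server = (2.0, 3.0)   # setup of the central server
    per_worker = 0.5              # 30 minutes per analysis worker
    testing = (1.0, 4.0)          # testing with arivis and customer data
    demonstration = (1.0, 2.0)    # final demonstration
    workers_total = per_worker * analysis_workers
    low = central_server[0] + workers_total + testing[0] + demonstration[0]
    high = central_server[1] + workers_total + testing[1] + demonstration[1]
    return low, high


print(onboarding_hours(2))  # (5.0, 10.0)
```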

User Training:

- VisionHub training will take 1-2 hours.

- If V4D training is required, it will be scheduled with an application engineer; timing will depend on need, use cases, and the purchased support tier.

Security Aspects

Authentication and Authorization

The VisionHub API-Server manages users and all authentication/authorization tasks. All client communication is handled by the API-Server and forwarded to sub-systems (Tile-Server, Objects-Server, etc.) only after successful authentication and authorization. All client communication is hidden behind a proxy component that takes care of HTTP/S communication with external clients. VisionHub users are required to log in via the WebApp-Client. A JWT session cookie is used to allow secure communication between the WebApp-Client/Interactive Viewer and the API-Server.

The API-Server utilizes a configurable role-based model (default roles: admin, manager, user) to manage users. External authentication authorities (LDAP, AD, OAuth2) can be integrated.

For authorization, the API-Server combines:

- RBAC/permission authorization: configurable application/user rights that are not bound to a specific entity (e.g. userXX/roleYY is allowed to upload/register files), and

- entity-specific ACLs (e.g. userXX/roleYY is allowed to view dataset ZZ)

The WebApp-Client provides a permission UI for each authorizable entity to define its ACLs.
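To illustrate how the two kinds of checks differ, here is a minimal conceptual sketch. It is not VisionHub code: the tables, permission names, and the `user:`/`role:` principal format are hypothetical, and the source does not describe how the API-Server actually stores or evaluates permissions.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass
class User:
    name: str
    roles: Set[str]


# Hypothetical RBAC table: role -> application-level rights not bound to an entity.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "admin": {"upload_files", "register_files", "manage_users"},
    "manager": {"upload_files", "register_files"},
    "user": set(),
}

# Hypothetical ACLs: entity id -> principal (user or role) -> allowed actions.
ACLS: Dict[str, Dict[str, Set[str]]] = {
    "dataset-ZZ": {"role:manager": {"view"}, "user:alice": {"view", "edit"}},
}


def has_permission(user: User, permission: str) -> bool:
    """RBAC check: does any of the user's roles grant this application right?"""
    return any(permission in ROLE_PERMISSIONS.get(r, set()) for r in user.roles)


def has_entity_access(user: User, entity_id: str, action: str) -> bool:
    """ACL check: is the action granted on this entity to the user or a role?"""
    acl = ACLS.get(entity_id, {})
    principals = ["user:" + user.name] + ["role:" + r for r in user.roles]
    return any(action in acl.get(p, set()) for p in principals)


alice = User("alice", {"user"})
bob = User("bob", {"manager"})
print(has_permission(bob, "upload_files"))             # True
print(has_permission(alice, "upload_files"))           # False
print(has_entity_access(alice, "dataset-ZZ", "view"))  # True
print(has_entity_access(bob, "dataset-ZZ", "edit"))    # False
```

The point of the split is that application rights such as uploading are decided by role alone, while access to a particular dataset is decided per entity.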

Web Application Security

arivis ensures that all of VisionHub's publicly available routes pass the current OWASP Top 10 security risks checklist.